Tests and Sensibility

Surprisingly, some devs don’t test

It is a truth universally acknowledged that software should be built on good practices (or, put simply, that it should be well tested).

I can imagine Jane Austen saying that, if she were a software developer these days.

And yet it is still common to find that this isn’t the case.

You can imagine my surprise when, a few years ago, I found myself in the most surreal conversation.

At the time I was working at a famous hedge fund with billions of dollars under management (let’s call it the Smile Fund) and talking to a developer with at least 20 years of experience in C++, Java and probably a few other languages (let’s call him Bill).

As I was considering possible modifications to some of the code he had written, I couldn’t find where it was being tested. I tactfully asked if he could point me to the related testing code so that I could make sure I wasn’t going to break any expected behaviors. Much to my surprise, he told me he didn’t have any tests for it.

“Why do I need tests for it? I wrote it, it works, and it’s in production!”

I was still early in my career at the time, and the answer left me a little concerned. Can someone with that much experience really think that tests are optional? What am I missing?

He wasn’t shy about providing explanations for it, mostly revolving around a few of his convictions:

- It worked when I tested it

- Testing takes too long

- We have a QA team, so testing is their job

- If it didn’t work, the trading floor would have complained by now

They are not explanations, they are (poor) excuses.

Let’s look at them one by one:

It worked when I tested it

Bill is basically saying that he ran the code through a lot of examples (evidence?) and decided that the output was fine.

First of all… if he did all the “manual running of the code with examples” that he described, it seems a bit of a waste not to have captured all of that effort in a unit test.
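As a minimal sketch of what capturing that effort could look like, suppose the manual checks were a handful of inputs and expected outputs for a hypothetical `notional` function (the function and the numbers are illustrative, not Bill’s actual code). Each example that was typed in by hand once becomes an assertion that anyone can rerun. The test is a plain function, which a runner such as pytest collects because its name starts with `test_`:

```python
import math


def notional(quantity: int, price: float) -> float:
    """Hypothetical function under test: the value of a position."""
    return quantity * price


# Each tuple is one example that would otherwise be checked by hand, once.
MANUAL_EXAMPLES = [
    (100, 10.0, 1000.0),
    (-50, 20.0, -1000.0),  # short position
    (0, 99.5, 0.0),        # flat position
]


def test_notional_matches_manual_examples():
    # A failing assert reports which (quantity, price) pair broke.
    for quantity, price, expected in MANUAL_EXAMPLES:
        assert math.isclose(notional(quantity, price), expected), (quantity, price)
```

The examples live next to the code and run on every machine, not just on the developer’s computer on the day they were tried.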

But let’s not focus on that for now.

Let’s instead turn our attention to the fact that he tested it:

- at one point in time

- on his development computer

The fact that it worked when he tested it seemed to reassure him enough that he didn’t feel the need for “proper” unit tests.

The limitation of that argument is that the validation he allegedly took the time to perform will never be performed again, by him or by anyone else. What we have is a point-in-time validation that cannot be reproduced.

This is the kind of situation that you encounter when, after the discovery of a bug, the developer whines “but it worked when I tested it!”.

Testing once is not enough: unit tests are there to constantly reassure us that everything is still running as intended.

One more thing… “it worked on his development computer”.

Different environment variables, OS versions, tool versions, amounts of RAM, CPU speeds and dependency versions can all cause programs to behave differently. Even forgetting to commit one file that exists on my local computer, but that nobody else in the company has, can stop the most well-crafted software from building or running in production and on other developers’ machines.

Running unit tests as part of CI (Continuous Integration), on a system that mimics the specs of the deployment environment, is the best way to validate a program before it’s even allowed to merge into the development mainline, let alone deploy to production.

Testing takes too long

That’s a classic :)

It is human nature to discount the value of future time in favor of the present.

We seem to disproportionately value our ability to make apparent progress now, even at the expense of significant delays in the future.

According to an IBM study, the cost of detecting and fixing a bug increases exponentially with time. The earlier you find it, the better.

An hour spent writing proper tests will probably save you a few days of reproducing some strange behavior observed in production, before you even start looking for and implementing a solution. And that’s not even counting the impact on your credibility (and karma) as a developer. Would you rather be known for your skills, or for the office meme of “is it Bill again?”

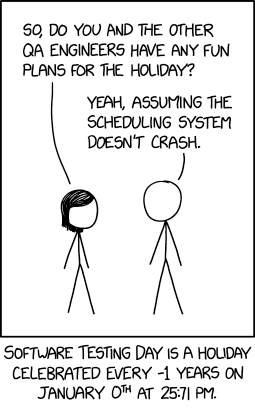

We have a QA team

Do we really?

Most companies, especially small ones and startups, don’t actually have a QA team. When they do, it tends to focus on end-to-end or user-experience testing. If QA finds a problem with the overall behavior of the system, tracing it back to its cause will take time and luck, and that’s assuming the problem even manifests itself in current usage.

But even in the unlikely scenario that we did have a group of code ninjas babysitting us and making sure that every class we churn out works as expected, should we really waste their time by writing code without caring whether it works? If we don’t take responsibility for making our own code easy to test, we’ll write abominations that are hard to test and hardly reusable. It’s a waste of resources on multiple fronts.

One more note about why that scenario is unlikely: for it to work, we would need a development process with exact specifications and requirements for every small piece of code, agreed upon by developers and QA. Who has the budget for that?

In reality, what “Bill” was most likely referring to was our trading floor, where the traders, moving millions of dollars at a time, were quite well versed in (and used to) finding problems with our department’s code.

Way to establish trust and reputation…

And that brings us to the last point. Keep reading.

If it didn’t work they would have found out by now on the trading floor

Complacency kicks in when your code hasn’t revealed itself to be broken. Even things that seem to be working well for a while might be harboring the seeds of disaster. Things could run smoothly for months before you accidentally hit a division by zero, a null pointer, a multi-threading issue, or an uninitialized variable (oh, the horror!).

Can you imagine the amount of time (did I mention the embarrassment? I think I might have) it will take to fix a bug that only manifests itself weeks later?

That is why testing is so important in the first place. As you write tests, you get a chance to poke holes in what you have written.

You can step back for a second and make sure that all usages of your code work and are safe, not just the two cases you already used it in :0) . That’s why testing requires a very different mindset from developing: you have to look at your code with fresh eyes and no assumptions, and search for the ways you can break it.
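To make “poking holes” concrete, here is a minimal sketch built around a hypothetical `average_price` helper (not from any real codebase). The first test covers the case the author already had in mind; the other two deliberately go after the division by zero mentioned above. The tests use plain `try`/`except` so they need nothing beyond the standard library:

```python
import math
from typing import List, Tuple

Fill = Tuple[int, float]  # hypothetical (quantity, price) pair


def average_price(fills: List[Fill]) -> float:
    """Hypothetical helper: quantity-weighted average price of a list of fills."""
    total_qty = sum(qty for qty, _ in fills)
    if total_qty == 0:
        # A naive version would divide by zero here, e.g. when there are no fills yet.
        raise ValueError("average price is undefined when total quantity is zero")
    return sum(qty * price for qty, price in fills) / total_qty


def test_typical_case():
    # (100 * 10.0 + 300 * 12.0) / 400 == 11.5
    assert math.isclose(average_price([(100, 10.0), (300, 12.0)]), 11.5)


def test_no_fills_is_rejected_explicitly():
    # The case nobody hits in the first two usages, until someone does.
    try:
        average_price([])
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for an empty list of fills")


def test_fills_that_net_to_zero_are_rejected():
    # A buy and a sell of the same size also sum to zero quantity.
    try:
        average_price([(100, 10.0), (-100, 11.0)])
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError when quantities net to zero")
```

Whether raising is the right behavior is a design decision; the point is that writing the test forces you to make that decision now rather than discover it in production.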

Testing, why we do it

So far we’ve mostly rebutted some ingrained ideas, but what if we thought about it from a clean-slate perspective… why do we test? When people ask us “why should I spend the time?”, what do we answer?

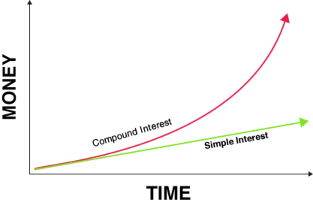

A financial analogy

Let’s look at it from a financial perspective: when we borrow money we pay interest for as long as we have outstanding debt. If we never repay the debt, we keep paying interest. If we don’t pay the interest, it accumulates as more outstanding debt, producing what’s called compound interest, which leads to exponential growth in how much you owe.
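The difference between paying the interest and letting it accumulate can be written down. With a starting debt $D_0$ and a per-period interest rate $r$ (symbols introduced here only for illustration), paying the interest every period keeps the debt flat, while leaving it unpaid multiplies the debt by $(1 + r)$ every period:

```latex
\begin{aligned}
\text{interest paid each period:}\quad & D_n = D_0 \\
\text{interest left unpaid:}\quad & D_n = D_0 \,(1 + r)^n
\end{aligned}
```

For example, at $r = 10\%$ per period an unpaid debt nearly doubles in seven periods, since $1.1^7 \approx 1.95$.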

Compare that to our technical progress: you can borrow against the future by not doing something you should be doing (the right thing), and that is, in fact, called “technical debt”. Just like monetary debt, you keep paying interest on it, in the form of time spent fixing problems that could have been avoided. If you don’t fix them, they compound, and you have compounding technical debt. The sooner you repay your debt, the less you end up paying overall.

What’s even better than repaying your debt quickly? Not borrowing in the first place! But we can’t always afford that, so as long as you’re aware of how much you’re borrowing and you budget for paying it back soon, it can be a reasonable way to deal with temporarily limited resources or pressing timelines.

It’s interesting that IBM’s study found that the cost of tech debt grows exponentially, just like money debt ;)

Your Insurance Policy

Writing unit tests for a piece of software is like taking out an insurance policy against future failure. You don’t test just once: you set up a mechanism that allows anyone to verify that the code is in good working order. That’s a big difference! Because the verification is reproducible, it can run in CI on every build, and every other developer on the team can make sure that everything works in their environment as well.

But even more importantly, if anyone (you included) decides to change your implementation for whatever reason, they now have a suite of tests that can instantly tell them whether the changes are good. Your code is protected against future changes not only in its own implementation, but also in its dependencies. Imagine one of your libraries subtly changing its behavior in some corner case: your tests could catch it before the upgrade is released to production.
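As a minimal sketch of that kind of safety net, the tests below use Python’s standard library as the “dependency” and pin down rounding behavior that hypothetical pricing code might rely on. Python 3’s built-in `round` sends exact halves to the nearest even integer, and the `decimal` module lets you choose the rounding mode explicitly. The two-decimal, round-half-up requirement is an assumption made up for the example:

```python
from decimal import Decimal, ROUND_HALF_EVEN, ROUND_HALF_UP


def test_builtin_round_is_half_to_even():
    # Python 3's round() rounds exact halves to the nearest even integer.
    # If downstream code relies on this, the test makes the assumption explicit.
    assert round(0.5) == 0
    assert round(1.5) == 2
    assert round(2.5) == 2


def test_decimal_rounding_modes_we_rely_on():
    # Hypothetical requirement: prices are quoted to 2 decimals, halves rounded up.
    cent = Decimal("0.01")
    assert Decimal("1.005").quantize(cent, rounding=ROUND_HALF_UP) == Decimal("1.01")
    # The same value under half-to-even rounding lands on the even cent instead.
    assert Decimal("1.005").quantize(cent, rounding=ROUND_HALF_EVEN) == Decimal("1.00")
```

If a replacement library, or a change in how the code calls it, produced different results in these corner cases, the tests would fail in CI rather than on the trading floor.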

Likewise, when other devs’ code is well tested, you can make changes anywhere and still be confident that you haven’t introduced problems or misunderstood the expectations of the original piece of software. It frees you from having to re-check everybody else’s code to make sure that things “still work”. And when someone uses your code and tests their own, you will know if any changes you later make accidentally break their usages and expectations of your code.

This freedom to modify is also what allows a team to improve things without fear, move faster, try new approaches, and rework code for performance, readability or just reusability. That is what a high-performance team can do, but it only works once you’ve invested in the protection that prevents unwanted consequences. How many times have you tried to improve some code, only to find out that there’s too much risk of introducing problems you won’t discover until much later?

Trusted Components

Once you have well-tested pieces of code, they become building blocks that can be reliably used by anyone in the team.

Those building blocks help create more well-tested pieces of code: you’re re-using already-tested blocks, and you only need to test the new code.

For example, we don’t usually re-write basic containers: the language we use typically has a pretty good implementation of basic containers, which is also well documented, well tested and produces clear error messages.

As users of those well-built components we appreciate how we can blindly trust them.
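As a small sketch of building on trusted blocks, the hypothetical `net_positions` function below delegates the counting to `collections.Counter` from Python’s standard library. The counting itself is already well tested upstream, so the tests only need to cover the one new rule (flat positions are dropped). The function name and the trade format are assumptions made for illustration:

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Trade = Tuple[str, int]  # hypothetical (symbol, signed quantity): buys > 0, sells < 0


def net_positions(trades: Iterable[Trade]) -> Dict[str, int]:
    """Hypothetical new code: net signed quantity per symbol, dropping flat ones."""
    totals = Counter()  # trusted building block: missing keys start at 0
    for symbol, qty in trades:
        totals[symbol] += qty
    # The only new logic worth testing: symbols that net to zero are omitted.
    return {symbol: qty for symbol, qty in totals.items() if qty != 0}


def test_net_positions_drops_flat_symbols():
    trades = [("AAA", 100), ("BBB", 50), ("AAA", -100), ("BBB", 25)]
    assert net_positions(trades) == {"BBB": 75}


def test_net_positions_empty():
    assert net_positions([]) == {}
```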

On the other hand, if we can’t trust what our team has written, we’ll end up rewriting it (and re-inventing the wheel). That causes even more technical debt because now we have two portions of our code that do the same thing (or a dangerously similar thing) but each with its own quirks and … bugs.

Why aren’t we as enthusiastic when it comes to re-using code internally? There is often an issue of trust between teams and developers, which leads to more work and more code. In the typical rush of development under pressure, that new code also ends up mostly untested, leading to further technical debt.

The Road Ahead

It takes discipline, but it pays off quickly:

- You can develop new code, test it and know that it’s ready.

- You can modify old code and know that your changes didn’t break it.

- You can release and deploy faster, and that’s the irony when you hear people complain that they “don’t have time for testing”.

Surprisingly to most, spending time testing code makes your development faster down the line: less time spent debugging and rolling back releases, faster time to deployment for new features, and higher overall developer productivity.

Once again that financial analogy comes in handy: you are making an investment that will pay dividends in the future.

Invest wisely and get rich! ;)